Imaging tests: Using them wisely

Follow me at @ashishkjha

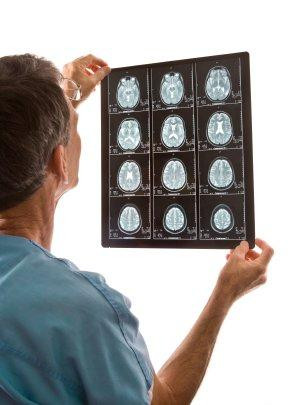

Half a century ago, physicians had few options for diagnostic tests to obtain images of the body. Worried about a brain tumor? A physician might order a pneumo-encephalogram (PEG), which entailed injecting air into the spinal canal and taking x-rays of the head, hoping to spot an abnormality. Tests like these were painful and ineffective, leading physicians to shy away from excessive imaging. The last five decades have seen dramatic progress in technology and innovation, but not without consequences.

The upside — and downside — of innovation in imaging

In the 1970s, CT scans became available. For the first time, physicians could visualize the living brain without opening the skull. The march of diagnostic innovation continued into the 1980s with the advent of MRI and into the 1990s with the rise of the PET scanner. By the year 2000, the American physician had a broad set of diagnostic tools with which to visualize and evaluate every body part. These breakthroughs have been lifesaving, allowing us to identify lung cancers while they are still curable and to diagnose appendicitis without having to perform surgery first. Because these tests are now so easy (and painless), they have become commonplace.

But this proliferation comes at a cost. Most obvious is the financial cost, which often runs into the thousands of dollars for each test. But there are clinical costs as well, such as the meaningful amount of radiation exposure patients get with each CT scan. And finally, many of these tests reveal information that clinicians don't know how to interpret. For instance, a CT scan might incidentally reveal a mass that is likely benign, but having seen it, clinicians often feel compelled to biopsy the tissue, subjecting patients to further risk with unclear benefit. And yet, the number of imaging tests done each year continues to climb, and Americans receive more MRIs and CT scans, per capita, than people in nearly any other nation in the world.

A new model for medical imaging

In a recent issue of The New England Journal of Medicine, Dr. Daniel Durand and colleagues laid out an approach to curtailing unnecessary testing. The current testing process is often inefficient and frustrating. The large number of tests available can be confusing to clinicians, and the ease of ordering has made multiple tests (an x-ray followed by an ultrasound followed by a CT scan, for example) all too common. Insurance companies, meanwhile, are putting up barriers, asking doctors to justify their orders to an administrative entity that decides which tests are appropriate. Dr. Durand and colleagues offer a different approach: medical-imaging stewardship. Under this system, which is modeled on recent hospital efforts to ensure appropriate use of antibiotics, imaging experts (e.g., a radiologist versed in best practices) would work directly with clinicians to help decide when a test will be most helpful, and to select the right test. With the computerization of medicine well under way, the authors note that computer-based decision aids can help physicians make better choices, and imaging experts can step in when pre-determined algorithms are not applicable.

The goal of such a stewardship program is to let evidence and peer feedback drive more appropriate use of imaging. It's a worthy goal. But will it reduce unnecessary tests while also ensuring appropriate, often life-saving, uses of these technologies? The jury is still out. This approach has worked well for promoting more appropriate use of antibiotics. But changing clinician behavior around diagnostic tests is hard. Many physicians are used to certain diagnostic algorithms, and breaking those habits is challenging. More importantly, many patients don't fit into an algorithm and require a customized approach. Unless the imaging expert has substantial clinical credibility, physicians are unlikely to change their practices just to satisfy organizational guidelines.

Improvements in diagnostic imaging represent one of the biggest areas of clinical progress over the past half century. But all progress has costs. As we think about how to ensure that patients reap the benefits of these advances and avoid the harms of over-testing, imaging stewardship — clinicians being guided by evidence-based algorithms and imaging experts — will become increasingly important. It may not be a cure-all, but it'll be an important part of how we deliver the right care for all patients.
